I don’t normally care that much about the titles of my articles, to the point where this is one of the leading complaints about my work. “How dare he?” says the HackerNews Commenter[1], noting that there are some small irregularities in my article title about an absurdist football novel featuring both Tim Tebow and an ancient, hidden techno-utopia that airdrops Happy Meals and The Wire DVDs into the Canadian wilderness.

I haven’t suddenly started caring about titles a lot more, but I did work much harder on this one; I even went through multiple drafts. This industrious betrayal of my slothful nature was motivated by one thing and one thing alone: I wanted to make sure you didn’t read it as “Why I don’t like EA: A justified list of good reasons to hate the effective altruists that I’m already pretty sure about.”

I don’t have that kind of confidence. What I do have is a boiling desire to hate Effective Altruists, a group that is at least nominally about giving away a lot of money to various charitable causes while ensuring the cash is used efficiently in a way that maximizes the good the funds can do. There’s no clear reason on a superficial level why this should be so, and it is superficially that I know them. I am not an expert on the movement.

And yet I hate them on a deeply primitive, reflexive level. I wish to destroy their camps, salt their fields, and displace them from their place in history. I wish to burn their grand palaces to the ground. I wish to topple the mighty statues of their foreign gods and kings. I wish them to be known to future historians only to the extent they can be extrapolated from the shape of the crater they left behind.

Why? I have no idea. If you asked me why I don’t like the completely unrelated walkability movement I could easily tell you that it’s because they broadly pursue policies that would make driving places harder and more time-consuming, while I want the opposite. If you asked me why I don’t like people who are really into the precautionary principle, it would be that they’ve overapplied it and (probably) killed untold millions of people.

My dislike for EAs isn’t that; it comes from a deeper, more lizard-brain kind of place and not the well-considered math-driven parts of my brain. But something must have triggered this, right? I’m not alone; fully half of Twitter hates them, too, for reasons that appear about as deep as mine. Something must be triggering this, not just for me but for a lot of people. There has to be a metaphorical first domino somewhere that starts this chain reaction.[2]

Since I’m not really allowed to hate people in the first place, I’m pretty motivated to find out what the root cause is. Fair warning: This article is me trying to figure something out for myself; it’s probably not going to end up becoming the go-to definitional piece on EAs for all eternity.

Where does the money go?

The first and most obvious cause of my dislike would be if EAs, as a whole, were spending the money on useless or counter-productive things. Since “stupid way to spend the money” is a concept that relies on some standard of “good way to spend the money”, the easiest way to get started is by looking to see where the money actually gets spent.

Note that if you are looking for a deep-dive quant analysis of whether or not EA money is actually accomplishing the goals they state, I’m not your guy and this isn’t your article. This is partially because I’m not quant enough to actually handle this well and get you numbers you can trust, but it’s more because this wasn’t information I knew when I started disliking them.

Wherever my instinctive dislike is coming from, it’s not a data-driven place, because I simply don’t know the data to answer questions like “are the mosquito nets working to stop malaria as expected”. I suspect this is true for some significant percentage of the other people who don’t like EAs, in that they dislike them for more qualitative reasons that would persist even in the face of strong evidence the EAs were doing a lot of good.

I’m going to draw heavily from EffectiveAltruism.com’s impact article to define the “where the money goes” concept here. Yes, I’m aware that’s probably not representative of all EA spending, but they are a behemoth in the EA world and very likely where most new EA-minded people first get an idea of what the movement is. Broadly, they think the following things are important:

World Health Stuff

If you were inclined to explain EA to someone as a positive force in the world, this is probably where you’d start. The basic concept is pretty simple - the EA looks at a dollar you give to a homeless guy and says “At best, this is going to buy him a McDonald’s parfait or something. But there’s places in the world where a surprisingly low multiple of 1 USD can literally save a life.”

Essentially the thought here is that wigs for cancer kids are pretty nice, but those wigs cost like $1000 to make; if it costs about $5000 to save a life, are you comfortable saying that five affluent-by-global-standards kids’ self-esteem is more valuable than one kid’s continued survival?

EAs build on that philosophy by giving money to charities pursuing goals like global deworming initiatives, malaria bednet distribution, and alleviating global poverty. We could argue about whether or not the last is particularly doable for a movement the size and shape of EA, but I think it’s a moot point; if this kind of stuff were all EAs did and the only thing they talked about, virtually nobody would dislike them.

Nobody likes death, and while “let’s use the money to save literal lives before we do much else” isn’t an absolutely unassailable position, it’s pretty defensible nonetheless. Wherever my problem with them is, it isn’t here.

(Note leading into a longer footnote: It has been pointed out to me that not enough words were spent here indicating how much EA focuses on this one issue. According to this slightly outdated document, it’s about 45% of what they do in terms of cash-sent-out.[3])

Animal Welfare

Here’s that sweet controversy we all crave.

For the purposes of understanding what’s going on here, I’m going to assign you a slightly-inaccurate-but-useful assumption: For the average person who signs up for stuff like EAs, math occupies the same functional throne that a deity would in a divine command theory system of morality. Where math tells them to do something, it would be wrong for them not to, no matter how much their instincts might initially push against it.

Many EAs assign animal life some fraction of the value they would humans. Since there are an awful lot of animals (more than several, by some estimates) and animals are pretty easy to please, that ends up meaning that EAs spend a lot of money on animal welfare.

I’m using “oh, those crazy hippies” tone here, but this isn’t entirely outside of the bounds of most people’s morality, whatever the system. If by spending $1 you could ensure that all birds in the world were able to get enough food and had fun little puddles to play in whenever they wanted, you probably would. That’s because very few of us assign a value of 0 to animal happiness.

Where EAs differ is in trying to quantify that and weigh it against human life, but even that’s not unique - remember that we had animal welfare organizations well before this movement existed. Their deification of math makes them get a little weirder with it than most (see: trying to figure out ways to optimize the lives of even wild animals), but this isn’t coming from nowhere.

I explain all this because it’s important to know the pathway they take to get where they go - if life is good, that includes animal life, they would say. Even if animal lives are worth a fraction of human lives (and not all EAs agree this is so), sufficient animal lives would still outweigh an individual human life.

I think this is important to keep in mind when you notice that the organization that got you in the door with malaria-and-bednets is suddenly talking about how much money they dump into subsidizing companies trying to make fake meat out of soybeans and the souls of barley grains that died with significant regrets.

I suspect some amount of the people who strongly dislike EAs might dislike them a little less if they saw the mental journey that gets them weird places as opposed to just the destinations they reach.

That said, sometimes even the most logical of journeys fail to let you completely dismiss your dislike of a destination. Given that EAs are sometimes (in practice) going to ask you to let a kid in Somalia starve to fund research on how to keep wolves from eating rabbits, this might be one of those times for some.

Existential Risk

What’s worse than nearly everyone dying? Not much, say the EAs. On the premise that an ounce of prevention might be worth a pound of not-being-exterminated, they dump GDP-of-a-small-country piles of money into heading things they see as existential risks off at the pass.

In terms of things EAs see as big enough risks to try and prevent, probably the most normal are pandemics and nuclear war. Whether or not they do much to actually stop these is again beside the point (because, again, I didn’t know whether or not they were effective at it when I started not liking them), and as things-everyone-doesn’t-want entrants they are pretty strong. As with malaria nets in the first category, I think if this was all EAs included in their stuff-trying-to-kill-us basket nobody would really disapprove.

More controversial is the near-universal EA fear of AI. In the EA-sphere, it’s very common to believe that artificial intelligence is very close to getting to the point where it can improve itself without human intervention, and that once this happens there will be few (if any) roadblocks in the way of an almost immediate amplification of the technology to deity-like levels of power.

Their fear of this outcome is deepened by the near-complete lack of people actually actively working to make advanced AI who agree with them. Of all the things EAs dump money into, this is the most clearly pointless. There’s thousands of companies working on AI in some way or another, none of them are listening to EAs, and only some of them are even in portions of the world where EAs have any sway at all.

That said, AI is functionally only a portion of what EAs do; there’s entire orgs that focus on only that, but they are by no means the only orgs in the movement. But being one of the big, splashy, visible parts of the movement, it ends up being one of the primary things people fixate on when they think about EAs.

Since I’m trying to pin down why I don’t like EAs, it’s worth stopping here to note that none of this stuff is it. Like, yeah, sure, I don’t care nearly as much about factory farm animal welfare as they do. But unless they are going to do something classically hateable like “use lobbying to enforce their weird will on others” regarding chicken-husbandry, there’s no reason for me to care.

Besides some portions of the existential risk stuff, the rest of it is stock-in-trade charity stuff; a fair amount of us didn’t have a problem with Bob Barker wanting to control the pet population, and shouldn’t have a problem here really. I say that while ignoring all the “but, what if they do it weird?” questions that occur to me because deep down, I’m also aware that I’m only looking for those to justify my dislike, which is almost the opposite of the point of this exercise.

Political stuff

Effective altruism is a center-left movement. It says it isn’t; you can’t prove it is. But, like, they are very into solving climate change and willing to spend huge amounts of money on it. This is probably normal-sounding to my non-rationalist readers, but EAs are more or less the giving arm of rationalism. Rationalism at least somewhat leaves open the possibility that the implications of climate change might end up limited to a pretty small effect 100 years from now.

If you just disagreed with that last minimization of global warming hard - like real hard in your gut - that’s probably a pretty good indication you voted Biden in the last election. That’s not a problem, I’m not saying that’s bad, I’m just saying you are a Democrat. No big, but relevant because EAs also by and large disagree with it really hard for reasons which are very similar to yours, whatever they may be.

Earlier we talked about the animal welfare wing of the thing, so now note that anything a strawman EA decides is good is essentially something that stood a chance of being considered bad or neutral, as well.

Animal suffering isn’t an insane stretch for a place to stash charitable money, but note that someone (or a critical mass of someones) had to propose it as a good worth spending on, and then keep up on that until it got standardized as one of the big, normal EA causes. EAs being California vegans probably did help a lot with this.

Nuclear power is the kind of thing that utilitarians love - chemophobes hate it but it’s arguably a great alternative to everything else that you can counter-culturally argue for and look like the smart guy in the room. EAs broadly spend no money lobbying for this that I can tell, despite being dead-serious worried about greenhouse gases, as previously mentioned.

None of this is bad in and of itself; I’m not arguing you should believe the opposite of all these things here. But if I told you “there’s a guy who seems like he might be a vegan, he dislikes nuclear power because it’s icky despite being able to do the math to tell it’s a pretty good option most of the time, and he puts a lot of his income towards stopping CO2 emissions” you’d probably guess that guy voted a straight left ticket. That’s the guess I’m making here and the reason I bring all this stuff up.

I’m more-or-less conservative, but that doesn’t mean I more or less think the other side is blanket wrong about everything, or that even where wrong they are stupid in how they are wrong. But in a discussion about why I kneejerk-dislike a group, my knee-jerk motivations are very relevant, and tribalism is not something I’m immune to. Culture is hyper-relevant to that, and this is a very different culture from the one I’m in.

I do want my perceptions of EAs to be falsifiable here in some way. I want there to be a way for someone (maybe me!) to say “hey, these people aren’t who you think they are, it transcends politics/normal culture in principled ways”. If my perception is mainly built off things like this, which proposes a list of harmful speech types which boil down to “things that aren’t vegan or DNC party plank positions”, then I should probably be spending time looking for counterpoints.

At the risk of being too “get in the comments!”, I’d like to know if you have noticed times where EAs as either individuals or organizations have acted in ways counter to what a normal Democrat would want, especially where based on some explicitly stated controlling EA principle. Let me know.

Actual straight-up politics

At some point, EAs decided to fund an entire political candidate. Scott Alexander described him thusly:

2: The effective altruists I know are really excited about Carrick Flynn for Congress (he’s running as a Democrat in Oregon). Carrick has fought poverty in Africa, worked on biosecurity and pandemic prevention since 2015, and is a world expert on the intersection of AI safety and public policy (see eg this paper he co-wrote with Nick Bostrom). He also supports normal Democratic priorities like the environment, abortion rights, and universal health care (see here for longer list). See also this endorsement from biosecurity grantmaker Andrew SB.

Although he’s getting support from some big funders, campaign finance privileges small-to-medium-sized donations from ordinary people. If you want to support him, you can see a list of possible options here - including donations. You can donate max $2900 for the primary, plus another $2900 for the general that will be refunded if he doesn’t make it. If you do donate, it would be extra helpful if the money came in before a key reporting deadline March 31.

Which at first seemed to me like a big movie-twist reveal; here was a charitable group that had finally shown its true colors by spending rightful mosquito-net money on advancing their preferred partisan politics. It was then pointed out to me that Flynn’s campaign was in a district heavily expected to break Democrat anyway; since Flynn would be displacing another candidate who was different only in terms of EA alignment, they argued that it wasn’t partisan at all.

I think that leaves the best-case scenario as something like “EAs are involved in politics only in the sense that they give money to candidates based on their support of EA policies”. If true, this lets them essentially argue that they are leveraging a small amount of money (still millions) to get someone in place who might create a percentage improvement on how we spend our national money (billions or trillions). If so, this could arguably be a net gain from their perspective.

A worse-case scenario would be one where a similarly EA-aligned Republican candidate ran, but got no support due to party affiliation (thus revealing EAs’ motivations to be more partisan than argued). A step worse from that would be if EAs came to consider Republican policy to be bad in general, and from that assumption codified a focus on promoting DNC candidates to oppose them.

But since neither of these things has happened and since I’m a pretty staunch supporter of not punishing people for things they haven’t actually done and might not do, this can’t be a driver of my dislike (or can be, but only in error).

Might “pour millions of dollars into a campaign” end up proving to be a bad bet that produces minimal positive impact by stated EA standards? Sure. Is it possible that EAs end up devolving into a political fund biased towards the party they would have preferred even in the absence of their own movement? Possibly. But it doesn’t seem to have happened yet, or at least provably so, so I can’t hold it against them yet and still pretend I’m fair.

Being pretty sure they are correct about most or all things

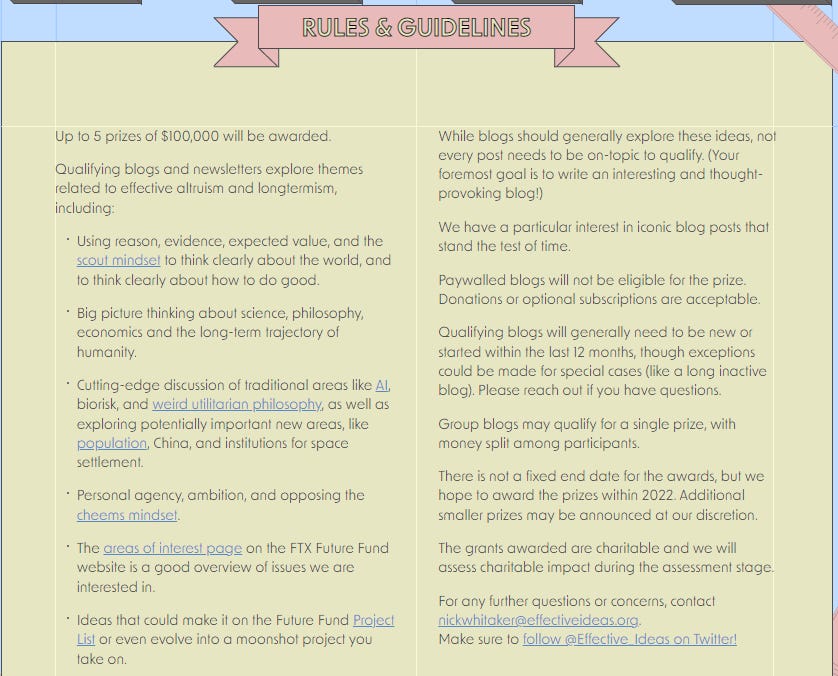

The EAs once ran a blog-funding grant/contest of sorts. For context, this was something I would never have been eligible for in any case due to the age of my blog, which I think means what follows from here probably isn’t sour grapes. The general “how to win” document looked like this:

Here was a prize for innovative, worthwhile thinking where both things were defined as “Agreeing with and boosting things we already think are correct”. Everything from moral philosophy to personal mindset to the exact list of important issues was covered; you’d have a good chance of winning if and apparently only if you agreed with EAs on 100% of things.

Note that this job already has various names ranging from “marketing guy” to “PR flack”. Now, is it wrong to hire either? No, I don’t think so - organizations are allowed to promote their own aims. But something about the framing here is weird; it’s a situation where an organization wants to pretend to promote new thought by saying things like this:

We’re eager to nurture the next generation of public thinkers, and support a flourishing discourse on the most important issues.

There is a burning need for new ideas, new arguments, new people, and a more lively public discourse around effective altruism—how to use reason to do good and how we can help our civilization flourish in the long run.

But has defined the acceptable range of thought to mostly preclude that in any situation where it doesn’t fit with a previously decided-upon definition of correct.

This instinctually strikes me as really wrong for reasons I’ll explain in a bit, but it would be wrong to not mention that it’s not like the EAs are defying some long-standing principle here. My mental list of organizations I know of that give out six-figure prizes to people who they fully expect to undercut their messaging sits firmly and consistently at 0 entries. Where the EAs differ here they differ by giving out a grant this size at all, not by giving it out in violation of some long-standing tradition.

Next story: At some point, the EAs decided to run a contest with a $20k grand prize for the best criticism of the Effective Altruism movement they received. As was the case with the blog prize, they included a how-to-win criteria list, and this was the first entry on it:

Critical. The piece takes a critical or questioning stance towards some aspect of EA theory or practice. Note that this does not mean that your conclusion must end up disagreeing with what you are criticizing; it is entirely possible to approach some work critically, check the sources, note some potential weaknesses, and conclude that the original was broadly correct.

Yes, that’s right: this contest dedicated to constructive, substantial criticism of EAs is fully winnable by someone who writes an essay detailing how they looked very deeply into all the available evidence and found that EAs are great in every way.

Is this bad? It’s weird, at least. Imagine asking someone to give you honest criticism about ways you can improve, and then including “and, listen, if you really can’t think of any, I’ll still accept you spending a lot of time and effort telling me how great I am” in the ask. It speaks, as the kids say, to mindset. That same flavor imbues several different parts of the document:

Fact checking and chasing citation trails — If you notice claims that seem crucial, but whose origin is unclear, you could track down the source, and evaluate its legitimacy.

Clarifying confusions — You might simply be confused about some aspect of EA, rather than confidently critical. You could try getting clear on what you’re confused about, and why.

Minimal trust investigation — A minimal trust investigation involves suspending your trust in others' judgments, and trying to understand the case for and against some claim yourself. Suspending trust does not mean determining in advance that you’ll end up disagreeing.

Outs are littered everywhere; escapes from actually criticizing them are omnipresent. But it’s weird, right? They are paying $100k total to have a contest with the stated goal of giving them criticism with which to improve themselves. If they end up getting a document that plays at being critical and then ends on a rah-rah everything turned out great so stay the course heroes note, what was that money for? A lot of people are already abstaining from offering criticism of EAs for free.

This kind of stuff informs my general impression of EAs more than anything else; there’s this sense that they are pretty sure they’ve got it all figured out to the point where all that remains is optimization on small points. On all the big ones, they are confident in the way that makes them seriously worry nobody will be able to find any way to criticize them.

To put it another way: it’s entirely possible (if unlikely) that the post you are reading, one in which a guy is working on figuring out ways to like and be less critical of EAs, could win that give-us-criticism contest if it was entered. But it shouldn’t, and any mindset that leads to the possibility that it may sits slantways to a healthy reality.

If this is a weird article, and I suspect it is, I apologize. It’s a product of a few weird things working in tandem:

1. I’m an inherently hateful, angry person. I spend an enormous amount of energy keeping this under control. There are people I love, and I love them a lot; there are people I like, and I give them a lot of my time. But there are people I hate, often for little or no reason. This doesn’t seem good to me, and I want to figure out ways to do it less.[4]

2. I’m culturally aligned against people who think they can figure out every single thing on a spreadsheet. I like Excel as much as anyone else, but I often think that things that intersect with the social don’t lend themselves well to mathematical analysis. When a movement like EA shows up and goes “Hey, we figured out pretty much everything - it’s utilitarianism and spreadsheets all the way down”, it raises my hackles and triggers 1.

3. EA is a big, diffuse movement; it’s hard to know every face of that particular Torah. I’m mostly criticizing one particular arm of it here. From experience with a previous article on walkability (in which I focused on one particular org prominent in that world), I know that a lot of complaints rightfully pointing out that I’m not seeing the whole picture are on their way. That’s not wrong, but it’s also unavoidable; there’s no way to criticize the whole thing at once.

Thus you get an article where an increasingly confused man points out a bunch of weird, vague problems he has with a weird, vague organization while at the same time pointing out that most of them are illegitimate.

I have a friend who has worked for a few pretty big charities; if you made a list of the 20 biggest charities you know about, you’d probably hit on one or two of them by accident. We were once talking about the amount of money his (at the time) employer spent on advertising, and whether or not it could be better spent in other places.

He let me know that he thought a lot of those criticisms were slightly off the mark. Yes, he said, the money they spent on advertising didn’t go to their goal, but it also brought in a great deal of new money; the positive ROI meant a net gain for the people they helped.

He allowed that he’d still be inclined to agree with the criticism if charity was a zero-sum game where only a set amount of dollars could be drawn towards charitable giving in general, but his experience was that most of the money their advertisements reached was “new” in the sense that it otherwise wouldn’t have been in the game at all.

My impression of the EAs is influenced by that. I like very, very little about them instinctively; the movement makes me uncomfortable in ways that approach revulsion. But at the same time, here I am trying to pin down a better reason why than “they don’t seem very much like me, actually” and failing to do so.

As with my friend’s “new charity money”, the EAs are broadly working on converting a group not specifically known for their giving (zillionaire techies) to regular payers of a secular 10% tithe. Even if I have valid criticisms (and amongst all the mess I’ve written today, there probably were cumulatively a few), they would need to be pretty big to offset the hundreds of millions of dollars they’ve pumped into doing good.

To put it another way: If I was to criticize them, a good counter-criticism would be to ask me what I’ve done this week that helped anyone. I’ve maybe done a bit, but I haven’t pumped the GDP of a small country into clean water projects. That doesn’t seem sufficient to give me a lofty perch from which to cast down aspersions, especially when doing so might demotivate them to do the charitable work I’m neglecting.

Time will show whether or not they are able to avoid the trap of considering themselves infallible or if they are able to avoid becoming an explicitly political organization as opposed to one that is merely implicitly so. But until such time as they give me a better reason to dislike them, the problem of that dislike appears to sit squarely in my court, not theirs.

[1] Very honestly I like my HackerNews-derived audience a lot; most of them that I’ve talked to have been great folks. But as a site, it’s unique in that commenters there will say “Regardless of what the content here is, I have opted not to read it because of either some small perceived error in the title, or because I don’t like the title of the blog and feel I can tell a whole lot about what the article is from that.”

[2] This is hypothetically an even bigger deal in situations where they don’t deserve the hate in the first place - then I shift from “unjustified hate” to “unjustified hate for someone who doesn’t deserve it”, which seems a much worse transition than the moderated language in those quotes indicates.

[3] There’s always an unresolved element when you talk about things like “What does this organization do, and what is it about at a fundamental level?”

I once was talking to a friend about Planned Parenthood. He was saying something like “Listen, they do an awful lot of things, of which abortion is just a small piece; I object to them being called an abortion clinic in a full-stop way like that’s who they are.”

And, yeah, he has a point. But at the same time, imagine I was talking about a group and said “Listen, they make wigs just like Locks of Love; that’s 99% of what they do. It’s not fair to zoom in on the 1% difference of them gathering once a year to sacrifice a bunch of rabbits to a VHS copy of Mystery Men.” We can sort of argue about whether or not that fixation on rabbit sacrifice is fair, but we also shouldn’t be surprised by it.

EA strikes me as a combination of the two situations. An awful lot of what they do is “give money to improve health around the world” with an emphasis on doing this well/effectively. This should absolutely not be ignored. But at the same time, nobody else is doing nearly so much work to prevent killer robots, and nobody else’s movement has a relatively common belief that eventually we should overbreed and overpopulate on purpose to the point where every living person is mostly but not entirely miserable.

These things are unusual enough that you can’t not talk about them, and you also can’t necessarily keep it from being what’s notable about the group. But it’s also unfair to not note that, hey, like 50% of this group’s efforts are mostly stuff you should be OK with.

[4] I got a warning from a beta-reader that this statement might one day be used against me in the court of public opinion, which is potentially true. That said, I don’t think that people can go around talking about tribalism and partisanship and a dozen things that boil down to “I hate this group” without acknowledging that reflexive hate of dissimilar people isn’t really all that rare.

I’d much rather acknowledge faults and try to fix them than pretend I don’t have them and lose all incentive to do so, if that makes sense. And that’s before we even get into the various ways my religion interacts with this, which are significant in their own right.

very ratsy of you to overintellectualize and try to find rational reasons for your feelings. to me the post reads more like "why ea is imperfect" and less like "trying to figure out why I don't like them", as it doesn't really have much of the self-psychoanalyzing one would think is necessary to understand one's feelings. this is gonna sound weird coming from a stranger online.. but, really, "I'm an inherently angry and hateful person" => "I have unresolved issues and would benefit from some good therapy and deeper understanding of my emotions" sounds like a no-brainer implication to me. sure, call me a member of a therapy cult. ignore me if this doesn't sound appropriate, meant this as advice, not an ad hominem.

not surprisingly from that "your feelings are your feelings and have little to do with reasons" stance, I mostly agree with your observations/characterizations, and it doesn't trigger me at all.

trying to understand your feelings a bit, sounds like part of it might be disappointment/disillusionment: "I thought they are knights in shining armor but turns out they are leftie california vegans", or maybe even "I thought they are knights and wanted to join but then I found vegan cows corpses in the basement".

Next stage is acceptance: yeah, their sociology is a mixed bag, and they have plenty of vegans and environmentalists and even some outright jacobin socialists under the umbrella - so what, compared to how pointless and inefficient a lot of charities (or government spending on education or health, for that matter) are, would argue - and I think you won't even disagree? - that EAs are net good, RCT-validated 3rd world cheap but effective interventions are a good idea (though you could argue re how much prominence it deserves), popularizing earning to give is mostly good, some attention to existential risk is not bad (though one could argue it's so hard a pursuit as to be mostly pointless).

ditto re self-advertising and overconfidence: again, it seems what you're doing is first setting unrealistically high expectations, and then getting angry over EAs not meeting them. like, you don't hate on a domino's ad for not being entirely even-handed and self-aware, do you? or on St Jude's (https://twitter.com/peterwildeford/status/1618739307447042048) for not being fully transparent in their ads and not including RCTs or QALY calcs?

in my model of the world, every org is to some extent dishonest and puts its own interests first, and isn't expected to self-incriminate, is allowed to promote itself, is expected to put the best spin on what it's doing.

EAs are not perfect but again, if you apply realistic standards and not some expectation of perfection, think realistically they aren't too terrible? more transparent than many, more willing to engage with external criticisms to at least some degree, and not say shush or ignore or viciously attack back like many other entities would.

or is it your context you're railing against? like, in my own backyard of NYC postrats, a lot of things you say are kinda common knowledge, rat vs EA split is deep and goes far back. is your community much more crazily into EA?

Here is a simple explanation about EA: it is mostly drafted by well-intentioned moralistic nerds who have no real social skills, and then fortified by manipulative narcissists wanting to turn this into a cult. The narcissists use the nerds as a meat shield against criticism, and the nerds are not aware of it yet since they are socially tone-deaf. This is of course familiar to subcultures that have endured the drama of jock and "normie" infiltration. https://meaningness.com/geeks-mops-sociopaths https://archive.ph/OvMnA

> spending the money on useless or counter-productive things

A classic reason why nerds do this is what Yarvin noted as "telescopic philanthropy", which happened about two centuries ago: nerds (and women) with too much money on their hands become crass with it, and think that most problems can be handwaved away with money and idealistic aid. Of course two things happen: the further you are from the organization using the money, the more likely it is to be misused ("a fool and his money are soon parted"); and the money lost will almost always be used in a nefarious way by manipulators, especially when socially naïve nerds have a weaker conceptualization of "the right people" than the working class does. https://graymirror.substack.com/p/is-effective-altruism-effective

> Even if animal lives are worth a fraction of human lives (and not all EAs agree this is so), sufficient animal lives would still outweigh an individual human life.

Case in point, THIS provides a good example of how things go wrong. Firstly, the value of animals doesn't scale the same way as that of people. For animals, since they often reproduce abundantly and also die quickly, the cost of saving one more of them may be low, but the value of saving one more of them is also low. Unless there is a Costco or Aldi for environmental aid, no thanks. For humans, since everything we do in our social life follows Metcalfe's Law (whether it is Facebook, Bitcoin, or your local community), having one more person on earth means a value increase relative to the current population size. https://archive.ph/uNVuW https://archive.ph/FexBS

Inherently humans are distinct from animals in this regard, and nerds are often tone-deaf about this since they are more left wing (right wing geeks please calm down for a second). They are more likely to be heterophilic (the opposite of "xenophobic" in its literal sense), and prioritize acquaintances over close friends, and when nationhood is controlled for, they disproportionately prefer earthly ecology and sometimes aliens. Weirdly enough liberalism is also tied to excessive verbosity and cultural-political participation relative to one's ability to reason and think scientifically, as demonstrated by the "wordcel" phenomenon. https://www.nature.com/articles/s41467-019-12227-0 https://emilkirkegaard.dk/en/2020/05/the-verbal-tilt-model/ https://emilkirkegaard.dk/en/2022/02/wordcel-before-it-was-cool-verbal-tilt/

> Their fear of this outcome is deepened by the near-complete lack of people actually actively working to make advanced AI who agree with them.

Yarvin again dissected "AI risk" to its core, making a point that a lot of people miss: ANY AI needs to be fed by human behavior, and as soon as there is a "human data strike" no AI can ever be as strong as these sci-fi lovers imagine. Another problem is that for AI to truly be sci-fi level powerful, it must first control wetware and meatware (brain and body) in some predictable but intrusive way. The problem with this line of thought is that humans are valuable for their ability to "be free", and lack of freedom is itself a type of malfunction. A smoking motherboard after overclocking is the same as a human "getting smoked" by any system, no matter how cultish or idealistic it is. https://graymirror.substack.com/p/there-is-no-ai-risk https://graymirror.substack.com/p/the-diminishing-returns-of-intelligence

Another way to see this "AI risk" issue is that every trap of the AI is equivalent to some human problem. Roko's Basilisk (retroactive punishment by force)? Cancel Culture. Paperclip Maximizer (blind achievers)? Burnout culture and ROI-centric modern economics. Alignment Problem (not letting the AI "hack it")? Problems of middle management. It seems that nerds never see organizational and systemic problems for what they are, and instead encode them into AI thought experiments. Kind of a coward's way of whining about how much the office sucks while wanting to be paid to give bad advice instead of fixing it... come to think of it, this is exactly how "investment gurus" get people to buy products that seem to solve a problem, only for the buyers to realize it is a half-way attempt at explaining its intricacies. Silicon Valley angel investor mindset. venting: this is now The Menu (2022) but replace food with AI tech. https://archive.ph/Ar5Sx https://archive.ph/WwenP

> Rationalism at least somewhat leaves open the possibility that the implications of climate change might end up limited to a pretty small effect 100 years from now... Nuclear power is the kind of thing that utilitarians love... EAs broadly spend no money lobbying for this that I can tell...

See previous notes. If these liberal types are just genetically-wired tree-huggers we won't have problems, but they should be honest that their position is emotionally biased, and not purely based on rational judgement, on the front of either greenhouse gases OR ecology protection. But then again it is likely that they don't speak out at the moment since nuclear risk is of real concern, and war-proof alternatives like thorium and fusion are still on the horizon. Is it possible that these nerds might be either defensive against jocks (manifesting as theory of disarmament), or worried that they will emotionally snap and metaphorically "shoot up the school" given enough pressure (manifesting as madman theory and brinkmanship)?

> Here was a prize for innovative, worthwhile thinking where both things were defined as “Agreeing with and boosting things we already think are correct”... this contest dedicated to constructive, substantial criticism of EAs is fully winnable by someone who writes an essay detailing how they looked very deeply into all the available evidence and finds that EAs are great in every way... Outs are littered everywhere; escapes from actually criticizing them are omnipresent.

In essence their organized route of "steelmanning" (to harden or change one's argument to be robust) is devolving into some kind of braindead mental exercise. Steelmanning is always lit af, but this classic playstyle, similar to committee games from Yes Minister, is the martial arts equivalent of the Chinese master who can't even take a punch from an amateur boxer, and cries over how the rites have been broken and how it is "unfair". They are better off reforming it into a more modern style like what Bruce Lee did (e.g. Yarvin's Effective Tribalism proposal). At this point they are either spineless idiots, or they are only doing this as a liberal ceremonial equivalent of a Tithe (or of a Feng Shui master paying a visit). https://archive.ph/y1poc

> it’s entirely possible (if unlikely) that the post you are reading, one in which is a guy working on figuring out ways to like and be less critical of EAs, could win that give-us-criticism contest if it was entered.

The only way to win while showing them a bit of humility is through the Red Pen game. Yes, Slavoj Žižek is the joker, and we live in a society, harhar. But what are they trying to say without uttering the words directly? What is the main thing outside of the spreadsheet of the Tyranny of Numbers? "I am a brainy idiot being held hostage by narcissists, and we are starving!" Okay, outside of this west-coast prison for nice guys is the Nevada desert. Do you have enough willpower and street-smarts to walk out? https://newcriterion.com/issues/2015/3/in-praise-of-red-ink http://www.maxpinckers.be/texts/slavoj-zizek/ https://thomasjbevan.substack.com/p/the-tyranny-of-numbers